Big Data: Unveiling the Power of Data Analytics

In today’s technology-driven world, data is no longer just a buzzword; it is a crucial asset that drives the success of modern businesses and industries. The emergence of Big Data, with its massive volumes and intricate complexity, has opened up unprecedented opportunities for organizations to extract valuable insights and make informed decisions that can transform their operations. In this article, we explore what Big Data is, how it evolved, the impact it has had across diverse sectors, and the challenges, tools, and trends that shape it.

Defining Big Data

Big Data refers to large, complex datasets that cannot be effectively managed or analyzed using traditional data processing tools. These datasets are characterized by the four Vs: Volume, Variety, Velocity, and Veracity. Volume refers to the sheer amount of data generated, Variety encompasses the different types and sources of data, Velocity represents the speed at which data is generated and processed, and Veracity pertains to the reliability and accuracy of the data.

The Evolution of Big Data

The idea of Big Data dates back to the early days of computing, when organizations first began dealing with large volumes of data. In recent decades, however, the widespread use of the internet, the popularity of social media, and the emergence of Internet of Things (IoT) devices have led to an unprecedented surge in data generation. As a result, new tools and techniques had to be developed to store, organize, and analyze data on a massive scale.

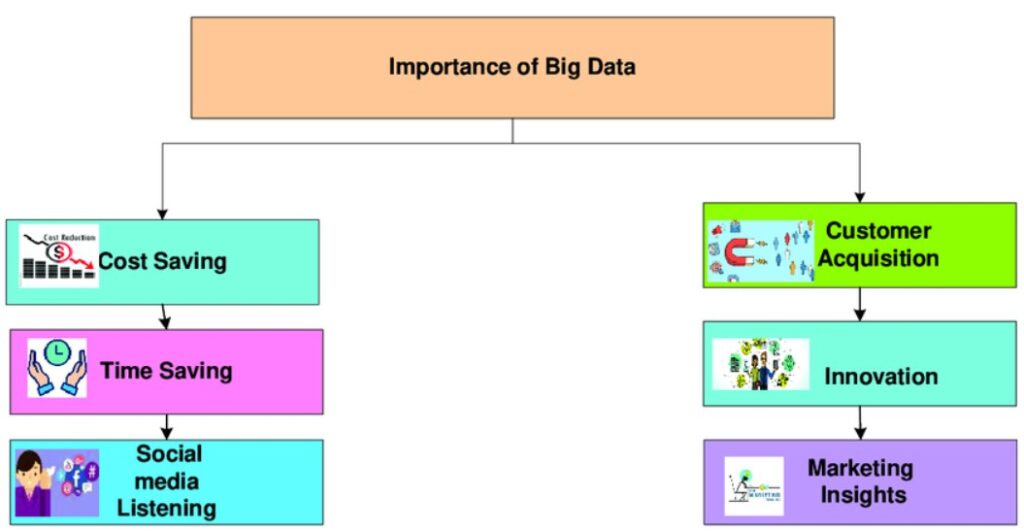

The Importance of Big Data

One of the primary benefits of Big Data is its ability to inform strategic decision-making. By analyzing large datasets, businesses can uncover hidden patterns, trends, and correlations that shed light on customer behavior, market dynamics, and competitive landscapes. These insights let organizations make data-driven decisions that improve operational efficiency, optimize processes, and drive business growth.

Enhancing Customer Experiences

Big Data plays a central role in improving customer experiences, enabling personalized interactions and targeted marketing. By analyzing customer data, organizations can gain a deeper understanding of individual preferences, behaviors, and needs. These insights support the delivery of customized products, services, and marketing messages that are more likely to resonate with the intended audience, which can in turn increase customer engagement, loyalty, and satisfaction.

Fueling Innovation

In addition to driving operational efficiencies and improving customer experiences, Big Data fuels innovation by powering research and development efforts and facilitating new product development. By analyzing data from various sources, organizations can identify emerging trends, market gaps, and customer needs, guiding the development of innovative products and services that address these demands. This iterative process of innovation enables organizations to stay ahead of the competition and drive market disruption.

Challenges of Big Data

One of the biggest challenges associated with Big Data is storing and managing large volumes of data effectively. Traditional data storage solutions often struggle to scale and accommodate the exponential growth of data, leading to issues such as data silos, latency, and inefficiencies. To address these challenges, organizations are turning to advanced data storage technologies such as Data Lakes and Data Warehouses, which offer scalable, cost-effective solutions for storing and managing Big Data.

Data Quality and Integrity

In the realm of Big Data, maintaining high data quality and integrity is a crucial challenge. Because Big Data aggregates vast amounts of information, often from many sources, errors, inconsistencies, and incomplete records are common, and they can undermine the accuracy and dependability of analysis results. Organizations therefore need to invest in data cleaning and preprocessing techniques such as deduplication, normalization, and validation. It is also essential to implement robust data governance frameworks and quality assurance processes that maintain data integrity throughout the data lifecycle.
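
To make these three techniques concrete, here is a minimal Python sketch that normalizes, validates, and deduplicates a handful of customer records. The record fields, the simplified email pattern, and the rule that two records are duplicates when their normalized emails match are all illustrative assumptions; production pipelines usually apply richer rules at far larger scale.

```python
import re

# Hypothetical raw customer records; field names are illustrative only.
RAW_RECORDS = [
    {"id": "1", "email": " Alice@Example.com ", "country": "us"},
    {"id": "2", "email": "alice@example.com", "country": "US"},
    {"id": "3", "email": "bob@example", "country": "DE"},
    {"id": "4", "email": "carol@example.org", "country": None},
]

# Deliberately simple pattern: something@domain.tld (not full RFC 5322).
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def normalize(record):
    """Normalization: trim whitespace and use consistent casing."""
    email = (record.get("email") or "").strip().lower()
    country = (record.get("country") or "").strip().upper()
    return {**record, "email": email, "country": country}


def is_valid(record):
    """Validation: reject records with malformed emails or a missing country."""
    return bool(EMAIL_PATTERN.match(record["email"])) and bool(record["country"])


def clean(records):
    """Normalize, validate, then deduplicate on the normalized email."""
    seen = set()
    cleaned, rejected = [], []
    for record in map(normalize, records):
        if not is_valid(record):
            rejected.append(record)
        elif record["email"] in seen:
            continue  # duplicate of a record we already kept
        else:
            seen.add(record["email"])
            cleaned.append(record)
    return cleaned, rejected


cleaned, rejected = clean(RAW_RECORDS)
print([r["id"] for r in cleaned])   # ['1']
print([r["id"] for r in rejected])  # ['3', '4']
```

Normalizing before deduplicating matters here: the first two records only look different because of whitespace and casing, and they collapse into one once both are normalized.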

Privacy and Ethical Concerns

With the increasing use of Big Data come growing concerns about data privacy and ethics. As organizations collect and analyze vast amounts of data about individuals, the risk of privacy breaches, data misuse, and ethical dilemmas rises. To address these concerns, governments and regulators have introduced data privacy regulations such as the EU’s General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA), which impose strict requirements on how organizations collect, store, and use personal data. Beyond legal compliance, organizations should follow ethical guidelines and principles that ensure data is used responsibly.

Applications of Big Data

Big Data has a wide range of applications across various industries, from healthcare and finance to retail and manufacturing. In healthcare, for example, Big Data analytics is used to analyze patient data, identify disease patterns, and improve treatment outcomes. In finance, Big Data is used for fraud detection, risk management, and algorithmic trading. In retail, Big Data powers personalized recommendations, demand forecasting, and supply chain optimization, among other applications.

Government and Public Sector

In the public sector, Big Data is driving innovation and efficiency across various domains, including smart cities, law enforcement, and public health. In smart cities, for instance, Big Data analytics is used to optimize transportation systems, improve urban planning, and enhance public services. In law enforcement, Big Data helps agencies analyze crime patterns, predict criminal activity, and allocate resources effectively. In public health, Big Data is used for disease surveillance, outbreak detection, and epidemiological research.

Research and Academia

In research and academia, Big Data is transforming scientific discovery and education. In science, Big Data analytics is used to analyze large datasets, simulate complex systems, and uncover new insights in fields such as genomics, astronomy, and climate science. In education, it is used to personalize learning experiences, track student performance, and inform pedagogical strategies. More broadly, it enables researchers to uncover hidden patterns, trends, and relationships in vast amounts of data.

Big Data Technologies and Tools

The storage challenges described earlier are commonly addressed with Data Lakes and Data Warehouses. Data Lakes provide a centralized repository for storing structured and unstructured data at scale, typically in raw form with a schema applied only when the data is read (schema-on-read), while Data Warehouses take a structured, schema-on-write approach to storing and querying structured data. Together, these solutions enable organizations to store, manage, and analyze large volumes of data efficiently.
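
The difference between the two approaches is easiest to see side by side. The following Python sketch is a toy model, not how any particular lake or warehouse product works: the in-memory lists, the `SCHEMA` dictionary, and the function names are hypothetical. The warehouse path validates and types each row before storing it (schema-on-write), while the lake path stores raw payloads as-is and applies the schema only when the data is queried (schema-on-read).

```python
import json

# Illustrative schema: column name -> expected Python type (an assumption).
SCHEMA = {"order_id": int, "amount": float}


def write_to_warehouse(table, row):
    """Schema-on-write: validate and coerce before storing; bad rows raise."""
    typed = {col: typ(row[col]) for col, typ in SCHEMA.items()}
    table.append(typed)


def write_to_lake(lake, raw_line):
    """Schema-on-read: store the raw payload untouched, whatever its shape."""
    lake.append(raw_line)


def read_from_lake(lake):
    """Apply the schema only at query time, skipping rows that do not fit."""
    for raw in lake:
        try:
            row = json.loads(raw)
            yield {col: typ(row[col]) for col, typ in SCHEMA.items()}
        except (ValueError, KeyError, TypeError):
            continue  # the raw data stays in the lake; this query ignores it


warehouse, lake = [], []
events = ['{"order_id": 1, "amount": "9.5"}', '{"order_id": 2}', "not json"]
for event in events:
    write_to_lake(lake, event)
    try:
        write_to_warehouse(warehouse, json.loads(event))
    except (ValueError, KeyError, TypeError):
        pass  # rejected at write time

print(len(lake), len(warehouse))  # 3 1
print(list(read_from_lake(lake)))  # [{'order_id': 1, 'amount': 9.5}]
```

The trade-off shows up in the output: the lake keeps every event, including ones the current schema cannot interpret, while the warehouse stores only clean, typed rows that are ready to query.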

Data Processing Frameworks

In addition to data storage solutions, organizations rely on data processing frameworks to analyze and extract insights from Big Data. Two popular frameworks in this space are Hadoop and Spark. Hadoop is an open-source framework that enables distributed processing of large datasets across clusters of computers, while Spark is a fast and general-purpose cluster computing system that provides in-memory processing capabilities. These frameworks offer powerful tools and libraries for data processing, analytics, and machine learning, enabling organizations to derive actionable insights from Big Data.
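
Hadoop’s original processing engine is built on the MapReduce programming model. The sketch below runs the classic word-count example in a single Python process to show its three phases (map, shuffle, reduce). It does not use Hadoop or Spark APIs; on a real cluster, each phase would run in parallel across many machines.

```python
from collections import defaultdict
from itertools import chain


def map_phase(document):
    """Map: emit a (word, 1) pair for every word in one input split."""
    return [(word.lower(), 1) for word in document.split()]


def shuffle_phase(pairs):
    """Shuffle: group all values emitted for the same key together."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups):
    """Reduce: combine each key's values into a single result."""
    return {key: sum(values) for key, values in groups.items()}


# Each string stands in for a data block that, on a real cluster,
# would be processed by a mapper on a different node.
splits = ["big data big insights", "data drives decisions", "Big data"]
mapped = chain.from_iterable(map_phase(s) for s in splits)
counts = reduce_phase(shuffle_phase(mapped))
print(counts["big"], counts["data"])  # 3 3
```

In Spark, the same job is commonly expressed with `flatMap` and `reduceByKey` on an RDD. Spark’s advantage shows up mainly in multi-step pipelines, where it can cache intermediate datasets in memory instead of writing them to disk between jobs.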

Data Visualization Tools

Data visualization is a critical part of working with large and complex datasets because it helps people comprehend the data and communicate insights clearly. Organizations use tools such as Tableau and Power BI to create interactive dashboards, charts, and graphs that reveal patterns, trends, and relationships in the data. Visualization helps organizations gain deeper insights, identify actionable trends, and convey findings to stakeholders more effectively.

Implementing Big Data Projects

Successful implementation of Big Data projects requires careful planning and strategy. Organizations must clearly define their objectives, identify key stakeholders, and establish metrics for success. By setting clear goals and objectives, organizations can align their Big Data initiatives with business priorities and ensure that they deliver tangible value.

Data Collection and Integration

Once the objectives are defined, organizations must focus on data collection and integration. This involves identifying relevant data sources, collecting data from disparate sources, and integrating data into a centralized repository. Data pipelines are used to automate the process of ingesting, cleaning, and transforming data, ensuring that it is ready for analysis.
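
A data pipeline is essentially a chain of stages. The following minimal Python sketch, built from generators, ingests a small CSV export, cleans it, and transforms it into analysis-ready records. The CSV content, column names, and cleaning rules are hypothetical; production pipelines usually run on dedicated orchestration and streaming tools, but the ingest → clean → transform structure is the same.

```python
import csv
import io

# Hypothetical raw export from one source system; in practice this could
# be a file, an API response, or a message queue.
RAW_CSV = """user_id,signup_date,plan
101,2024-01-05,Pro
102,,free
101,2024-01-05,Pro
103,2024-02-11, FREE
"""


def ingest(text):
    """Ingest: parse raw CSV text into dictionaries, one row at a time."""
    yield from csv.DictReader(io.StringIO(text))


def clean(rows):
    """Clean: drop rows missing a signup date and exact duplicates."""
    seen = set()
    for row in rows:
        key = tuple(row.values())
        if not row["signup_date"] or key in seen:
            continue
        seen.add(key)
        yield row


def transform(rows):
    """Transform: cast types and standardize values for analysis."""
    for row in rows:
        yield {
            "user_id": int(row["user_id"]),
            "signup_month": row["signup_date"][:7],  # YYYY-MM
            "plan": row["plan"].strip().lower(),
        }


def run_pipeline(text):
    # Generators chain lazily, so rows stream through one at a time.
    return list(transform(clean(ingest(text))))


for record in run_pipeline(RAW_CSV):
    print(record)
```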

Analysis and Insights

After collecting and integrating data, organizations can analyze it and draw valuable insights from it. Exploratory Data Analysis (EDA) is used to uncover patterns, trends, and relationships in the data, while predictive analytics techniques forecast future outcomes. Together, these techniques give organizations a better understanding of their data and support strategic, well-informed decisions.
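
As a small illustration of both steps, the Python sketch below first summarizes a series with descriptive statistics (a typical EDA starting point) and then fits an ordinary least-squares linear trend to forecast the next value. The monthly sales figures are invented, and a straight-line trend is only one of the simplest predictive techniques; real projects would check seasonality, outliers, and model fit before trusting a forecast.

```python
import statistics

# Hypothetical monthly sales figures (units); illustrative data only.
monthly_sales = [120, 135, 150, 149, 170, 182]


def summarize(values):
    """Exploratory step: basic descriptive statistics."""
    return {
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "stdev": statistics.stdev(values),
        "min": min(values),
        "max": max(values),
    }


def fit_linear_trend(values):
    """Ordinary least squares fit of value = intercept + slope * t, t = 0, 1, 2, ..."""
    n = len(values)
    ts = range(n)
    t_mean = (n - 1) / 2
    y_mean = sum(values) / n
    sxy = sum((t - t_mean) * (y - y_mean) for t, y in zip(ts, values))
    sxx = sum((t - t_mean) ** 2 for t in ts)
    slope = sxy / sxx
    intercept = y_mean - slope * t_mean
    return slope, intercept


def forecast(values, steps_ahead=1):
    """Predictive step: extrapolate the fitted trend beyond the last point."""
    slope, intercept = fit_linear_trend(values)
    return intercept + slope * (len(values) - 1 + steps_ahead)


print(summarize(monthly_sales))
print(f"Next month forecast: {forecast(monthly_sales):.1f}")  # 192.4
```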

Deployment and Monitoring

Finally, organizations must put the insights derived from Big Data analysis into action and continuously monitor their impact. This involves integrating insights into business processes and workflows, implementing changes based on them, and tracking key metrics to measure performance and effectiveness. Continuous improvement is essential to ensure that Big Data initiatives keep delivering value and driving business success.

Big Data Security and Compliance

In today’s world of Big Data, organizations face numerous security and compliance challenges. To tackle these challenges, it is imperative to have a comprehensive and well-established data governance framework in place. Such a framework lays out clear policies, procedures, and guidelines for data management. This ensures that data is handled securely and ethically at every stage of its lifecycle. By adopting effective data governance structures, organizations can significantly reduce risks and ensure compliance with regulatory requirements.

Data Protection Measures

In addition to governance frameworks, organizations must implement data protection measures to safeguard sensitive information and mitigate security risks. Encryption protects data both in transit and at rest, keeping it confidential even if it is intercepted or stolen. Access control mechanisms restrict access to data based on user roles and permissions, helping prevent unauthorized access and data breaches.
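
Role-based access control can be sketched in a few lines. In the Python example below, the role names, permission strings, and `ROLE_PERMISSIONS` mapping are hypothetical; real deployments usually rely on the access-control features of their data platform or identity provider rather than hand-written checks. The key design choice is deny-by-default: a request succeeds only if one of the user’s roles explicitly grants the permission.

```python
from dataclasses import dataclass, field

# Hypothetical role -> permission mapping; real systems load this from policy config.
ROLE_PERMISSIONS = {
    "analyst": {"read:sales"},
    "data_engineer": {"read:sales", "write:sales"},
    "admin": {"read:sales", "write:sales", "read:customer_pii"},
}


@dataclass
class User:
    name: str
    roles: set = field(default_factory=set)


def permissions_for(user):
    """Union of the permissions granted by each of the user's roles."""
    perms = set()
    for role in user.roles:
        perms |= ROLE_PERMISSIONS.get(role, set())  # unknown roles grant nothing
    return perms


def check_access(user, permission):
    """Deny by default: allow only if some role explicitly grants the permission."""
    return permission in permissions_for(user)


alice = User("alice", {"analyst"})
print(check_access(alice, "read:sales"))         # True
print(check_access(alice, "read:customer_pii"))  # False
```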

Risk Management

Effective risk management is essential for identifying and mitigating potential threats to Big Data security. Organizations must conduct risk assessments to identify vulnerabilities and threats, prioritize risks based on their likelihood and potential impact, and implement controls and safeguards to mitigate them. Incident response plans outline procedures for responding to security incidents, minimizing their impact, and restoring normal operations quickly.
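
One common convention for prioritization is to rate each risk on likelihood and impact and rank risks by the product of the two. The Python sketch below assumes 1-to-5 scales and an urgency threshold of 12; both are illustrative choices rather than a standard, and the example risks are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain); the scale is an assumption
    impact: int      # 1 (negligible) to 5 (severe)

    @property
    def score(self):
        return self.likelihood * self.impact


def prioritize(risks, threshold=12):
    """Rank risks by score; those at or above the threshold get controls first."""
    ranked = sorted(risks, key=lambda r: r.score, reverse=True)
    return [(r.name, r.score, r.score >= threshold) for r in ranked]


register = [
    Risk("Unencrypted backup storage", likelihood=3, impact=5),
    Risk("Stale analyst accounts", likelihood=4, impact=3),
    Risk("Data center outage", likelihood=1, impact=5),
]
for name, score, urgent in prioritize(register):
    print(f"{score:>2}  {'URGENT' if urgent else 'monitor':7}  {name}")
```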

Future Trends in Big Data

One of the most significant trends shaping the future of Big Data is the integration of artificial intelligence (AI) and machine learning (ML) technologies. AI and ML algorithms are increasingly being used to automate data analysis, identify patterns, and derive insights from Big Data. These technologies enable organizations to extract value from large and complex datasets more efficiently and make better-informed decisions.

Edge Computing

Another emerging trend in Big Data is the rise of edge computing, which processes data closer to its source, at the edge of the network. By moving computation closer to data sources, edge computing reduces latency, improves response times, and conserves bandwidth. This is particularly beneficial for applications that require real-time data processing and low latency, such as IoT devices, autonomous vehicles, and industrial automation systems.
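
The bandwidth savings come from sending summaries instead of raw streams. In this minimal Python sketch, a hypothetical edge device reduces a window of 600 temperature readings to a single summary record and raises an alert locally when a reading exceeds an assumed threshold, so the decision does not wait for a round trip to a central server. The readings, window size, and threshold are all invented for illustration.

```python
import json
import statistics

ALERT_THRESHOLD = 80.0  # hypothetical temperature limit


def summarize_window(device_id, readings):
    """Runs on the edge device: reduce a window of raw readings to one record."""
    peak = max(readings)
    return {
        "device": device_id,
        "count": len(readings),
        "mean": round(statistics.mean(readings), 2),
        "max": peak,
        "alert": peak > ALERT_THRESHOLD,  # decided locally, no round trip needed
    }


# Simulated window of raw sensor readings (values are invented).
raw_readings = [70.0 + (i % 12) for i in range(600)]

summary = summarize_window("sensor-17", raw_readings)
raw_bytes = len(json.dumps(raw_readings).encode("utf-8"))
summary_bytes = len(json.dumps(summary).encode("utf-8"))

print(summary)
print(f"raw payload: {raw_bytes} bytes, summary payload: {summary_bytes} bytes")
```

Only the small summary record would be sent upstream, while the raw window can be discarded or kept locally for a short time.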

Quantum Computing

Looking further ahead, quantum computing could transform Big Data analytics by enabling data processing capabilities beyond the reach of classical computers. Quantum computers exploit principles of quantum mechanics, such as superposition and entanglement, and for certain classes of problems they may perform calculations far faster than classical machines. This opens up new possibilities in areas such as cryptography, optimization, and drug discovery, and could unlock new frontiers in Big Data analytics.

Conclusion

Big Data represents a significant shift in the way organizations manage and analyze data. It provides valuable insights that support strategic decision-making and innovation. However, it also brings challenges related to data storage, quality, security, and compliance. Emerging technologies such as AI, edge computing, and quantum computing promise to transform Big Data analytics further. By leveraging Big Data effectively, organizations can stay ahead of the curve and unlock new possibilities for success in the digital age.